Analytica’s Advanced Analytics group is researching the use of Applying Natural Language Processing (NLP) Models For Federal Use Cases, such as Meta’s OPT-175B and OpenAI’s Generative Pre-Trained Transformer 3 (GPT-3) language model for use at our clients. These models are trained using massive amounts of data and use Deep Learning to produce text that looks like they were written by humans. While GPT-3 code is not available to the public, you can make calls to the model via a publicly available API (https://openai.com/api/). On May 3, 2022, Meta (Facebook’s parent company) announced that they are making their OPT-175B model available as Open Source. (https://ai.facebook.com/blog/democratizing-access-to-large-scale-language-models-with-opt-175b/) This competing model may prove useful if we decide that we need direct control over the model.

The most likely can use for NLP models is for document summarization. Several of Analytica’s clients have large collections of technical documents that are difficult to effectively search or scan for connections. Both the GPT-3 and OPT-175B models provide this capability. Unfortunately, the GPT-3 model’s public (and beta) API limits the number of words to about 1500, so it may not be able to handle many of CMS’s larger reports.

Summarizing CMS Reports

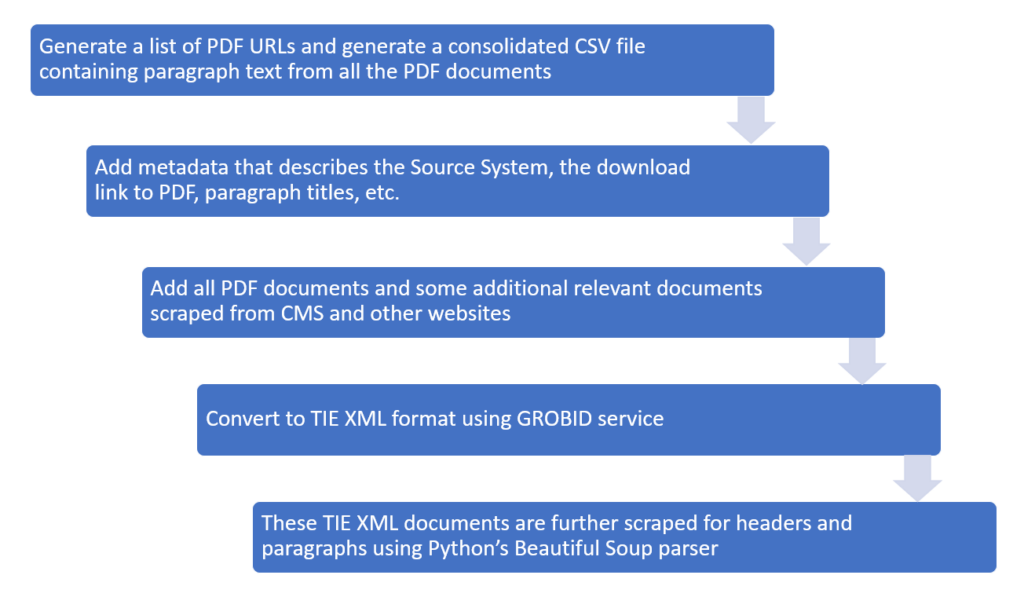

Meta recommends this approach for generating summaries of a collection of PDF files. Note that the data extraction process includes a lot more than just the document text.

Pipeline Process to Ingest CMS Technical Documents (PDF Files)

Once you have a collection of XML documents in this form, it is very easy to build a Python script to read them and create summaries. Here is an example of a call to the GPT-3 API:

import openai

openai.api_key = os.getenv(“OPENAI_API_KEY“)

document_text = “(text read from the document)”

response = openai.Completion.create(

engine=”text-davinci-002“,

prompt=”Summarize this text:\n\n” + document_text,

temperature=0.7,

max_tokens=64,

top_p=1.0,

frequency_penalty=0.0,

presence_penalty=0.0

)

As you can see, the call consists of 7 settings:

| engine | OpenAi offers 4 engines: text-davinci-002 is the most capable GPT-3 model. (See https://beta.openai.com/docs/engines/gpt-3 for details) text-ada-001 is the fastest and cheapest |

| prompt | A text string with the question. For summarization tasks, the document text is embedded in this string. Note that this string is limited in length. |

| temperature | Used to tell the model how much risk to task in computing the answer. Higher values mean the model will take more risks. |

| max_tokens | The maximum number of tokens to include in the answer. 16 is the default. |

| top_p | “Nucleus sampling” – Limits the number of results to consider. 1.0 here means that 100% of the results are considered, so the temperature setting is the only one that limits the return values. |

| frequency_penalty | Limits the likelihood the summary response will repeat itself. 0.0 is the default. |

| presence_penalty | Controls the number of new tokens that will appear in the response. 0.0 is the default. |

Next Steps

The biggest issue in using these models for document summarization is the limited number of words that can be summarized at a time. Analytica is looking at ways to work with and around this limitation (e.g., break the document into shards, summarize the shards, and then summarize the summaries) but a consistently high-quality result has not yet been found.